Changing Spatial Boundaries.

- by Junxi Peng

- in Thesis

- posted September 18, 2017

1. Introduction.

“Augmented reality will not only change the way architects work but also how we use the space,” says architect Greg Lynn (2016). Physical space can be transformed by overlaying digital information through a Head Mounted Display (HMD), an approach that is gradually rising in popularity. Under the influence of these technologies, our living environment might soon become more adaptable to its users, since the added layer of information can offer a range of customizable and alterable spatial experiences.

There are a lot of terms that define the research of interaction within realms, which may range from the Virtual to the Mixed, the Amplified and the Augmented Reality. All these ‘Realities’ share a common philosophy driven by the ways to enrich the experiences of perception of the artificial when it interacts with the physical, everyday world of human activities (Wang and Schnabel, 2010). This thesis is driven by the notion of Mixed Reality (MR) that is deployed to the new artificial environment. This intersection of real and virtual environments is defined as a Mixed Environment (ME).

Fig 1. Order of reality concepts ranging from reality (left) to virtuality (right)

Three fields can be changed by MR: the display of information, the creation of digital objects, and the further enhancement of existing ones (Zuckerberg, Facebook F8 conference, 2017). More specifically, information display places data onto a certain object, such as projecting navigation signals onto the street. The creation of digital objects allows users to interact with them further, while the enhancement of existing objects allows people to use existing environments in a different way. For example, one could use an ordinary physical object to draw 3D objects in a virtual world (Google Tilt Brush) or even use MR to create an alternative interior design for one’s house.

Set in an architectural design context, this thesis researches how the vision manipulation made possible by MR can change the perception of space, and how this change can affect human behavior in MR. Natural locomotion in virtual environments has been a heated topic for a long time, so how to use vision manipulation to create better natural locomotion becomes an interesting question.

The thesis asks how to procedurally generate the perception of a spatially enlarged walking experience while providing passive haptics in mixed reality. Passive haptics is the haptic feedback provided by a static physical object through its virtual proxy in MR. To answer the question, one should first understand how humans perceive physical space and what role movement plays in this perceptive process. This thesis presents a reinvented redirected walking algorithm as the virtual enlargement process, together with a method for creating MR environments that can be offered to and deployed by other developers, who in turn can alter them and produce a new series of MR experiences.

The word ‘Simulacrum’ describes how one can ignore the reliable input of his senses and resort to the constructs of language and reason, arriving as such at a distorted copy of reality (Artandpopularculture, 2014). Interestingly, we have no direct access to our physical world, other than through our senses (Berkeley, 2009) and our brains are highly elastic and programmable (Begley, 2009). It is proven that humans mainly perceive space by visual signals and through the locomotion sensors or proprioceptors in their bodies (Klatzky et al., 1998). Theoretically, our brains can be hacked by manipulating the visual images that may change our perception of the current space. Such an observation allows one to consider the redirecting of the perception of space as an opportunity for research. With a specific interest in architecture and scale, this thesis focuses on challenging human perception to the extent that allows users of the studied space to think that the ME is much larger than the Physical Environment (PE).

The deployed methodology draws upon technologies for precise movement tracking, 3D environment rendering, simultaneous localization, and the theories of mapping and redirected walking. As such, the system it presents uses PE as the template to reconstruct a new environment that extends a walkable area of the physical space and constructs a new virtual environment (VE) that allows users to walk freely in a much larger virtual space while providing a tactile experience. The latter adds a level of complexity as it allows them to interact with the physical boundaries, such as walls and virtual walls, while they are being redirected by the method of dislocating their vision.

The key contributions of this thesis are the following:

- It aims at providing the first redirected walking algorithm that can be applied to any size of physical space and can offer random routing possibilities in an expanded and tactile virtual environment. The algorithm has been reinvented from point to point path redirection to area mapping spatial reconstruction. Users can walk freely without any reset (rotation on the spot) or use of any transport space.

- Moreover, the supporting research provides a procedural process of generating the VE model that includes the steps of space scanning, model rebuilding, algorithm calculation, VE model generation/integration, and it can be easily deployed on ME.

- Finally, it leads to the development of a toolkit and detailed guide for generating the redirected walking environment. Any developer can download the toolkit as a package and follow the instructions to procedurally generate the VE based on the physical space of their choice. Meanwhile, users can create their own MR experiences that could greatly benefit MR gaming and storytelling application scenarios, as in the demo scene of the development kit, ‘Simulacrum 2200’, which will be explained in detail later in the thesis.

2. Related Technologies and Theories.

The challenge of creating a redirected walking VE requires a broad knowledge of technologies and a solid theoretical background. As such, the most directly related concepts and technological tools will be briefly introduced, before moving to the detailed description of the method, design, and implications of the Virtual Environment generation algorithm and system.

2.1 3D scan and reconstruction.

To use the physical space as a template to generate the VE, the system needs to be able to recognize and ‘read’ space. Nowadays, many tools allow us to gather the required data, ranging from 3D laser scanning to 2D image-based reconstruction. 3D laser scanning offers great precision: it can limit the deviation to about 1 mm, and the scan range can be up to 350 meters (FARO Focus 350). The downside of this equipment is that it is more expensive than other methods and takes longer to scan one scene. Low-cost range sensors, such as the Microsoft Kinect, provide robust real-time 3D scanning (depth range up to 4.5 m). Recently, Google released their Project Tango-equipped phone, the Phab 2, which can also be used as a scanner for 3D reconstruction. Newer approaches use 2D images to calculate depth maps. Technically, all the aforementioned methods can be integrated into our system.

2.2 Adaptive Systems.

The content of the constructed virtual environment is provided to the user through a head-mounted display (HMD). This equipment, which was unaffordable for a long time, is now broadly available in the consumer market. Among the available devices, the HTC Vive and Oculus Rift are both suitable for room-scale indoor tracking, and the HTC Vive also provides an object tracker that lets developers track physical objects in the virtual world. This equipment enables the project to test the algorithm in the real world and use it to study human vision and behavior.

2.3 Real walking in VR.

Walking in a VE is one of the most intuitive ways to experience MR, and it is the foundation for VE and PE synchronization. At the moment, there are four ways of moving in VR: using the controller to move virtually; mechanically making the body think it is walking; using a tracking system to follow the user’s real movement; or combining tracking with a redirected walking algorithm to enlarge the perception of the space. For the last two, different trackers are used to capture the movement of the user in the physical space.

Fig 2. Comparison Among Different Techniques For Movement In VR.

Movement detection is a standard feature in most VR equipment. For example, the HTC Vive can use two base stations to track a space of up to 4m x 8m. There are other solutions, such as an HMD-mounted depth sensor combined with area learning algorithms, which detects the user’s movement directly and gives users 6 degrees of freedom in a limitless physical space. Because this technology is still rather unreliable, the thesis uses Tango in the early experimental steps and HTC Vive base stations to track users’ movement for the demo experience.

2.4 Redirected walking theory.

Realistic locomotion across a large area has long been a challenge. Redirected walking was first introduced by Sharif Razzaque, Zachariah Kohn and Mary C. Whitton in 2001. The virtual scene is imperceptibly rotated about the user, and this rotation, together with a change of scale, causes the user to walk continually towards the furthest path without noticing the rotation (Razzaque, Kohn and Whitton, 2001). The method was later developed further at USC with the Redirected Walking Toolkit (Azmandian et al., 2016). All these algorithms use visual dislocation and on-the-spot reset rotations to navigate the user from point A to point B. This thesis introduces a new algorithm that provides both free-walking redirected perception and passive haptic design.

2.5 Passive Haptics. The design principles.

Passive haptics means that users receive haptic feedback from touching a physical object that serves as the proxy of a virtual object in the virtual environment. This helps users avoid passing through objects in VEs, which would make the experience unnatural (Lindeman et al., 1999). Physical replication of objects has been shown to bring certain benefits in immersive virtual environments (Insko, 2001). The algorithm this thesis suggests automatically synchronizes the boundary between physical and virtual space. Users receive full haptic feedback when touching a wall in the VE, and they can also interact with physical objects through their virtual representatives.

2.6 Human spatial memory and self-position in virtual locomotion theory.

Human spatial cognition and self-positioning depend mainly on visual, vestibular, and proprioceptive cues (Frissen and Campos, 2011). Updating position and orientation from visual signals appears to be considerably easier than updating from proprioceptive cues (Bellan et al., 2015). Visual landmarks are important for human spatial memory: users need visual landmarks or geometric cues to better know their position in the virtual space (Hartley, Trinkler and Burgess, 2004). That is to say, spatial and interaction design is critically important for both self-positioning and spatial memory. These are also key elements of the identity of each virtual space and of the overall enlargement achieved by redirected walking.

3. Redirected walking. Algorithm for walking freely with passive haptics.

This section describes the new redirected walking algorithm in more detail. Current technologies for precisely tracking users across a large space require a large number of cameras and extensive camera adjustment and calibration, which is hard to deploy in everyday life. Moreover, most VR consumers find it hard to set aside a large space for experiencing VR. For this reason, we need a way to compress human movement while synchronizing the VE and PE and providing a believable walking experience of an enlarged VE in a limited PE.

Redirected walking introduces subtle discrepancies between the user’s motion in the real world and what is perceived in the virtual world. This can lead the user to walk along different tracks in the real and the virtual world. It gives us the ability to compress human motion without users noticing and to contain the movement in a small tracked space. This algorithm is possibly the first redirected walking algorithm that allows the user to walk freely in an infinite space while providing the passive haptic sense of the space boundary in a limited physical space. The details of the algorithm are explained below.

3.1 Gains.

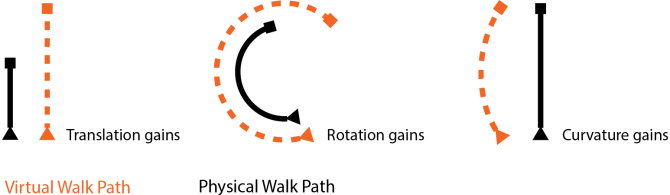

As the original redirected walking algorithm points out, the dislocation between real and virtual motion is achieved by applying gains in the HMD. Three different gains are used in this algorithm: translation, rotation, and curvature gains. These dislocations are carefully controlled by the calculation, which keeps them unnoticeable.

Fig 3. Different Gains Used In Redirected Walking Techniques.

Translation gains scale the user’s translation so that the speed of the movement can be increased or decreased in the virtual world. The rotation gain is the dislocation between the virtual head rotation and the actual physical rotation of the user. The curvature gains involve the dislocation of both the rotation and the scale of the vision: when the user walks towards a point in the virtual world, he/she walks on a curved path in the physical world (Azmandian et al., 2016).
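The three gains can be illustrated with a minimal top-down sketch. This is not the thesis implementation: the `Pose2D` type, the `apply_gains` function, its parameter names, and the sign convention for the injected curvature rotation are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counter-clockwise from the +x axis


def apply_gains(virtual, step, turn,
                translation_gain=1.0, rotation_gain=1.0,
                curvature_radius=math.inf):
    """Advance the virtual pose from one frame of physical motion.

    step: physical distance walked this frame (metres)
    turn: physical head rotation this frame (radians)
    All names and conventions here are hypothetical.
    """
    # Rotation gain scales the physical turn; curvature gain injects an
    # extra rotation proportional to the distance walked (step / radius).
    injected = step / curvature_radius  # 0.0 when the radius is infinite
    heading = virtual.heading + rotation_gain * turn + injected
    # Translation gain scales the distance covered in the virtual world.
    distance = translation_gain * step
    return Pose2D(virtual.x + distance * math.cos(heading),
                  virtual.y + distance * math.sin(heading),
                  heading)
```

With a translation gain of 1.5, one physical metre becomes 1.5 virtual metres. With an assumed curvature radius of 10 m, each metre walked adds 0.1 rad of virtual rotation, which the user compensates for by walking a physical arc.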

3.2 Area mapping.

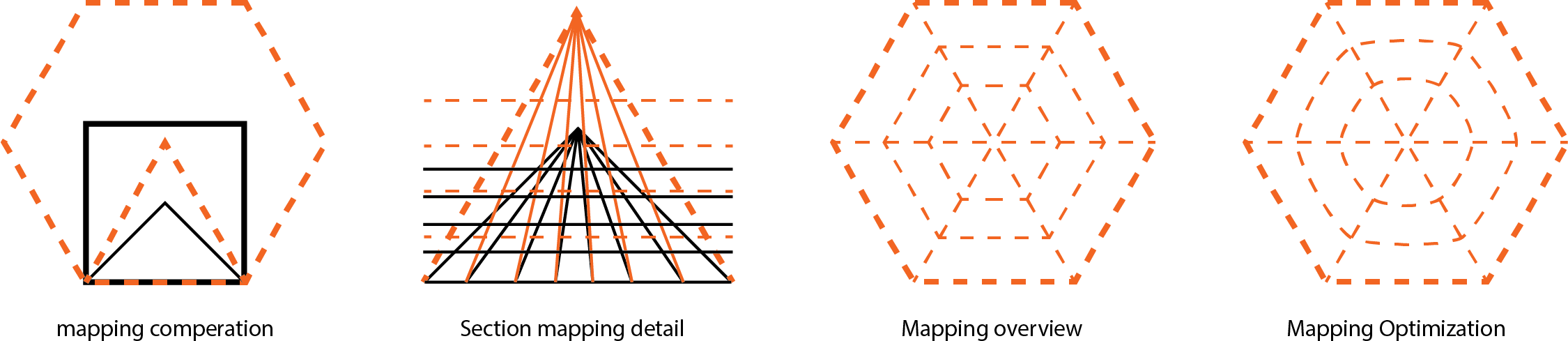

To give the user the ability to walk freely, this thesis reinvents the original redirected walking algorithm from point to point walking to area mapping. It develops a comprehensive algorithm that maps the virtual to the physical walkable area. The expansion is based on consistently changing the viewer’s walking path by dislocating the orientation and the transition scale of the walk based on the map.

Area mapping creates a smooth walking experience within the conditions of the enlargement. The algorithm focuses on smoothing the walking experience in real time at each position. (See “Problem Solving: During Mixed-Reality Project Development Process”, by Jessien Huang, for further mathematical details.) Moreover, area mapping distributes the dislocation between physical and virtual space evenly across the whole walking area, so that users do not experience any hard rotation to reset the redirection. As a result, the overall walking experience feels smoother and is technically more consistent.

Fig 4. Area mapping process demonstration.

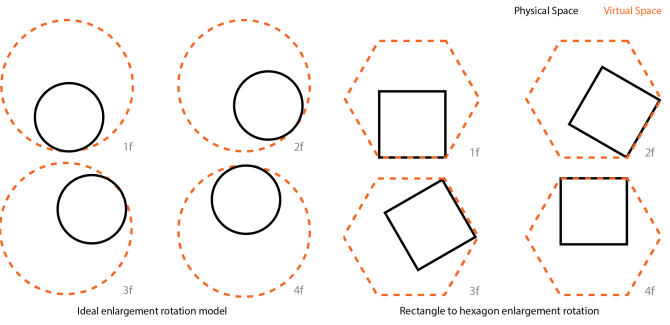

3.3 Real-time walking and redirecting.

To coordinate and measure the dislocation between VE and PE, we define the centre point of the VE as the virtual environment centre point (VECP) and the centre point of the physical space as the physical space centre point (PSCP). The redirection model can be pictured as two circular spaces, one rotating around the other: the PSCP rotates around the VECP. To illustrate this, we use a rectangle to represent the physical space and a hexagon to represent the virtual environment. The gold line shows where the edges of the two shapes coincide. This shape-rolling model ensures the synchronization of the space boundary.

Fig 5. The rotation model of real-time walking and redirecting
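A static snapshot of the rolling model can be sketched geometrically. The sketch below assumes a square physical room whose side equals the wall length of a regular virtual polygon, and computes where the PSCP sits when one physical wall coincides with a given virtual wall. The function names and the wall-indexing convention are hypothetical, and the sketch does not cover the real-time interpolation between walls.

```python
import math


def apothem(n_sides, side):
    """Distance from the centre of a regular polygon to the midpoint of a wall."""
    return side / (2.0 * math.tan(math.pi / n_sides))


def pscp_for_wall(n_sides, side, wall_index, vecp=(0.0, 0.0)):
    """PSCP position when a wall of the physical square coincides with
    virtual wall `wall_index` (assumption: wall 0 faces the +x direction)."""
    # Direction from the VECP towards the midpoint of the shared wall.
    angle = 2.0 * math.pi * wall_index / n_sides
    # The wall midpoint is one apothem from the VECP; the centre of the
    # square lies side / 2 inward from that same wall.
    offset = apothem(n_sides, side) - side / 2.0
    return (vecp[0] + offset * math.cos(angle),
            vecp[1] + offset * math.sin(angle))
```

For a 4 m square inside a hexagon, the PSCP lies about 1.46 m from the VECP, and stepping `wall_index` from 0 to 5 moves it around a circle centred on the VECP, consistent with the description of the PSCP rotating around the VECP.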

The combination of the gains, area mapping, and real-time walking and redirecting provides a complete solution that allows the free-walking redirected experience with passive haptics to take place effectively.

4. VE Geometry Generation Process Overview.

This project aims to be deployed in different application scenarios, such as exhibitions, games, scene graphs, education, remote tourism, and remote official business. For example, redirected real walking in VR could replace the controller-driven virtual movement used in first-person gaming and thereby improve the immersive experience. A standardized VE generation process therefore becomes important in such a context.

The environment generation is one of the most important software procedures of building an MR experience. This section will introduce the specific process we created to apply the redirected walking into a physical space based on a generated virtual environment. The work presented in this paper attempts to simplify the process of creating the expanded walking MEs, by using the space scan data to calculate the size of physical space and to automatically generate the VE using the integrated redirected walking algorithm.

Five steps are applied to enlarge the cognition of the space and generate the corresponding VE: the 3D scanning of the chosen space to get a precise floor plan; the division of the physical plan into sizes suitable for the algorithm; the selection of the proper enlargement parameters according to the application scenario; the generation of the VE with the automatic generation system; and, finally, analysis and debugging.

4.1 Space Scan.

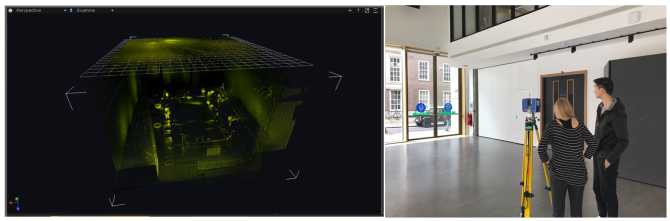

The enlargement of every space is based on scans of the physical space, so the premise of applying this algorithm is that we obtain an exact plan of, and additional information about, the actual space. Here we use 3D scanning to acquire the accurate scale of the space and the information for model building, so that the virtual and physical spaces can be synchronized. The two common methods of spatial scanning are low- and high-resolution scans.

4.1.1 Low-resolution scan.

Using Tango to generate the space model is the easiest way to get a proper floor plan. The whole scanning process can be done via the phone application. First, this thesis uses Project Tango to scan and collect the point cloud data to build a 3D map of the real environment. Then, software is used to analyze the point cloud data and simplify it into a low-poly model, which can be used to calculate the floor plan of the space.
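As a rough illustration of the last step, the floor dimensions of a rectangular room can be estimated from a point cloud by projecting the points onto the ground plane. This is only a crude stand-in for the low-poly simplification described above; the `floor_extent` name, the y-up axis convention, and the assumption that the room is aligned with the coordinate axes are all hypothetical.

```python
def floor_extent(points):
    """Return (width, depth) in metres of the axis-aligned floor rectangle.

    points: iterable of (x, y, z) tuples, with y assumed to point up.
    """
    pts = list(points)
    if not pts:
        raise ValueError("point cloud is empty")
    # Drop the height and keep the horizontal spread of the cloud.
    xs = [p[0] for p in pts]
    zs = [p[2] for p in pts]
    return (max(xs) - min(xs), max(zs) - min(zs))
```

A real scan would first need outlier removal and alignment of the room to the axes, which this sketch leaves out.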

4.1.2 High-resolution scan.

The high-resolution laser scan requires an editing process on a computer, using a point cloud editor and 3D modeling software such as Rhinoceros. With a 3D laser scanner such as the FARO, the scan is configured with the FARO Scene software. After the scan, the point cloud is edited with Point Editor to remove all unnecessary elements. The edited point cloud is then imported into Rhino to be reconstructed. This last step might include color correction and detail modification. At the end of this process, the floor plan for the algorithm generation is ready to be imported as a model into Unity.

Fig 6. Using the FARO equipment to make the high-resolution scan.

4.2 Redirected walking mapping.

After scanning the physical space, and with the redirected walking algorithm as our foundation, we can now focus on how to reconstruct the model into a perceptively larger walkable space. Consumer-grade VR systems like the HTC Vive and Oculus Rift provide reliable, low-cost room-scale tracking that allows the viewer to walk in a 4 x 8 meter physical space, such as the one we used in our demo for the generation and further expansion of the physical space. Technically, this system could be applied to a square space of any size.

4.2.1 Space division.

For the algorithm to be deployed, each floor plan needs to be divided into two “Amplifying Rooms” and any number of “Operating Rooms”. The Amplifying Rooms are two separate spaces designed for applying the enlargement algorithm. These are the rooms that get enlarged and synchronized at each wall. Users can switch between the two rooms and move from one virtual room to another.

On the other hand, the Operating Room is the space for operating specific interactions. That is to say that the scale of space and users’ visual rotation shall not be changed. In this case, this space acts as the equivalent to a real scaled space for users to interact with. The Operating Rooms will be linked by the Amplifying Rooms, covering two important kinds of spaces that can meet some of the users’ diverse needs.

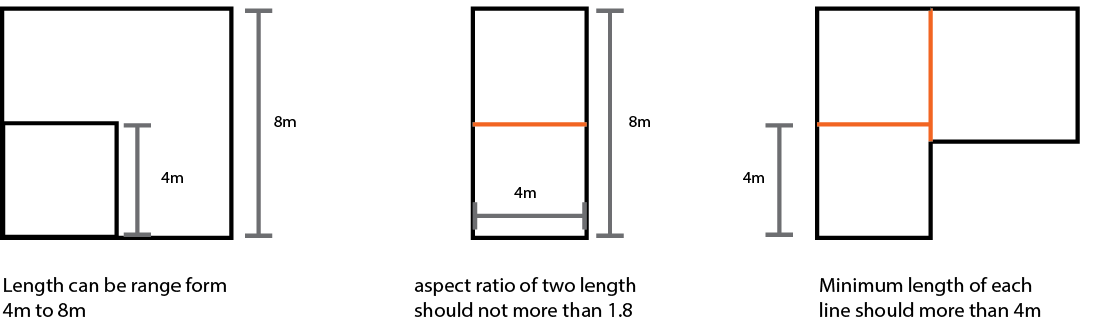

All spaces larger than 4m x 4m should be divided and re-designed so that the minimum side length of every Amplifying Room is 4m and the aspect ratio of each room does not exceed 1.8; any space with an aspect ratio of more than 1.8 is better divided into two virtual rooms. If the circumference of the divided room is less than 4m, it is better to use the room as an Operating Room. In theory, an Operating Room has no limitations regarding its scale and proportions, but it must be linked to an Amplifying Room, allowing users to enter seamlessly.

Fig 7. Space division examples.
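The division rules can be sketched as a small planning function. The 4 m minimum side and the 1.8 aspect ratio come from the text; the function name `plan_rooms`, the choice to halve an elongated space along its long side, and the reading that a room with a side shorter than 4 m becomes an Operating Room are assumptions made for illustration.

```python
MIN_SIDE_M = 4.0   # minimum side of an Amplifying Room (from the text)
MAX_ASPECT = 1.8   # maximum aspect ratio of an Amplifying Room (from the text)


def plan_rooms(width, length):
    """Split a rectangular space into (kind, short_side, long_side) rooms,
    where kind is "amplifying" or "operating"."""
    short, long_ = sorted((width, length))
    # Assumed reading: too small to amplify, so keep it at real scale.
    if short < MIN_SIDE_M:
        return [("operating", short, long_)]
    if long_ / short <= MAX_ASPECT:
        return [("amplifying", short, long_)]
    # Too elongated: halve it along the long side and plan each half again.
    half = long_ / 2.0
    return plan_rooms(short, half) + plan_rooms(short, half)
```

Under these assumptions, a 4 m x 8 m tracking area (aspect ratio 2.0) is split into two 4 m x 4 m Amplifying Rooms, which matches the two linked square rooms used as an example later in this section.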

4.2.2 Space Enlargement.

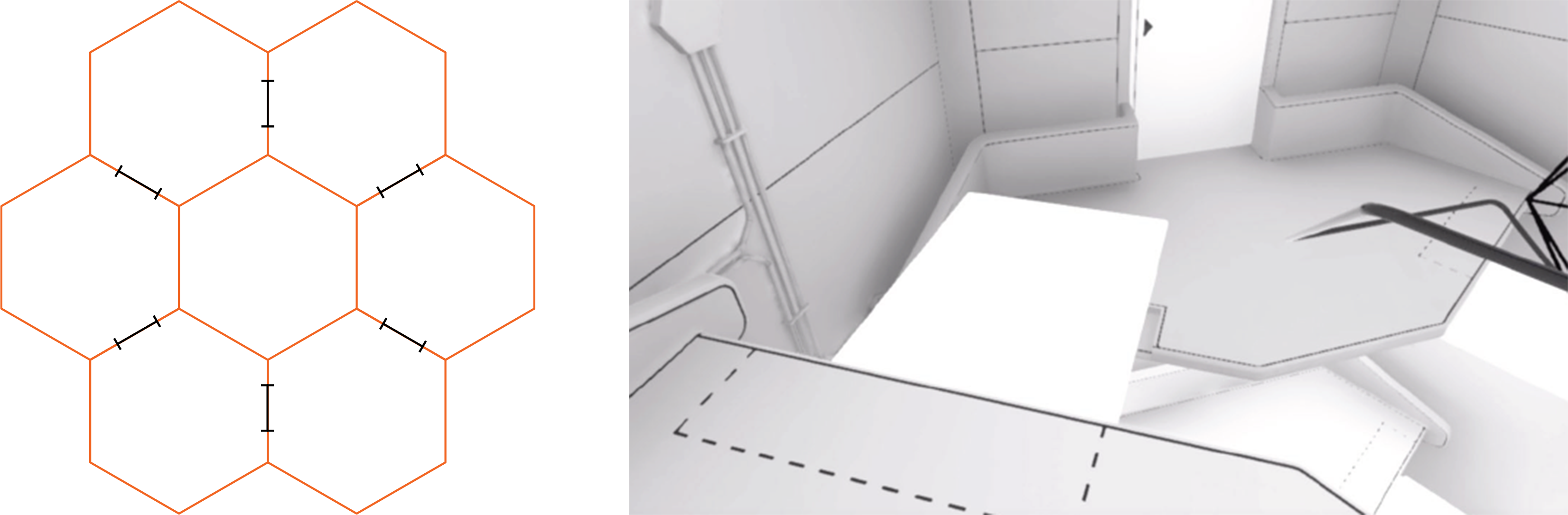

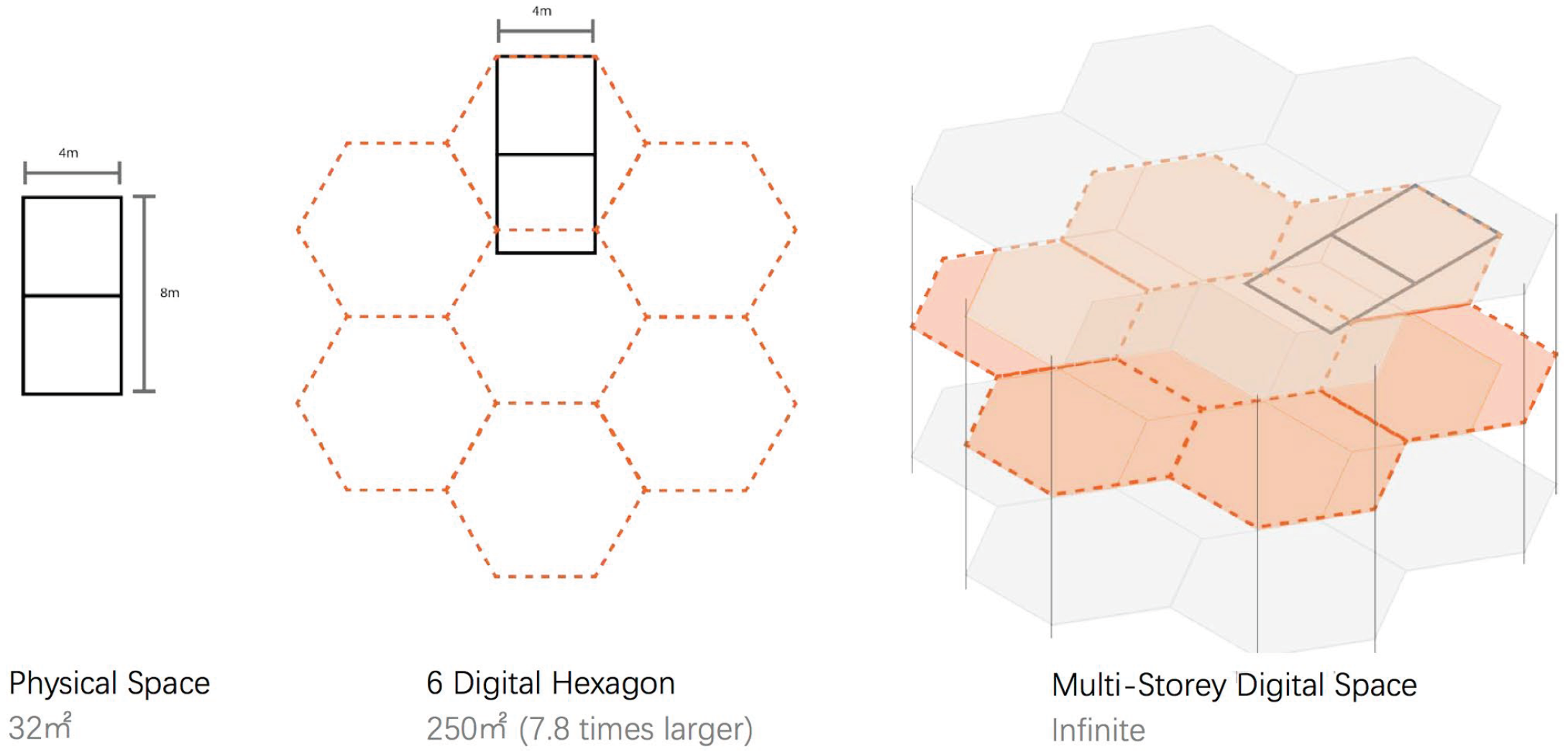

The first step of this process is to enlarge one Amplifying Room by increasing the number of its sides. Every Amplifying Room is enlarged by this algorithm at the perceptual level through area mapping, which means that every point of the physical space has a corresponding point in the virtual space. Starting from a rectangular physical space, users can choose to enlarge it into, for example, a pentagonal, hexagonal or even octagonal virtual one. As such, each enlarged space has its own scale of enlargement.

Fig 8. Different shapes of the enlargement mapping.

In order to synchronize the touch of the walls of the room, every wall in the virtual space has the same length as the wall in the physical space. So, when enlarging a square physical room, the pentagonal, hexagonal, heptagonal, and octagonal virtual rooms correspond to magnification ratios of 1.72, 2.598, 3.634, and 4.828, respectively. When the scale of enlargement gets bigger than this, the visual dislocation between the physical and virtual spaces increases as well, making the dislocation quite obvious to the user. In our experiments, we found that the enlargement ratio of the hexagon is the most widely acceptable proportion.
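The quoted ratios are consistent with the area of a regular polygon whose side equals the side of the square physical room, divided by the area of that square. The short check below assumes that interpretation; the function names are illustrative only.

```python
import math


def regular_polygon_area(n_sides, side):
    """Area of a regular polygon with n_sides walls of length `side`."""
    return n_sides * side ** 2 / (4.0 * math.tan(math.pi / n_sides))


def magnification_ratio(n_sides):
    """Virtual floor area over physical floor area for a square room,
    with every virtual wall as long as a physical wall."""
    return regular_polygon_area(n_sides, 1.0) / regular_polygon_area(4, 1.0)


ratios = {n: round(magnification_ratio(n), 3) for n in (5, 6, 7, 8)}
```

Because the wall length cancels out, the ratio depends only on the number of sides, so the same figures apply to a square room of any size.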

In terms of the generating process, the developer can get the area mapping plan by using the size data from the 3D scan. By defining the physical size of each Amplifying Room, the system will automatically generate the corresponding virtual floor plan and its area mapping plan. The system prefab will later take control of the position and rotation of the main camera. The developer can use the test model and debug tool or data information visualizer to adjust the model for further and future development.

4.2.3 The Switch between Two Virtual Rooms.

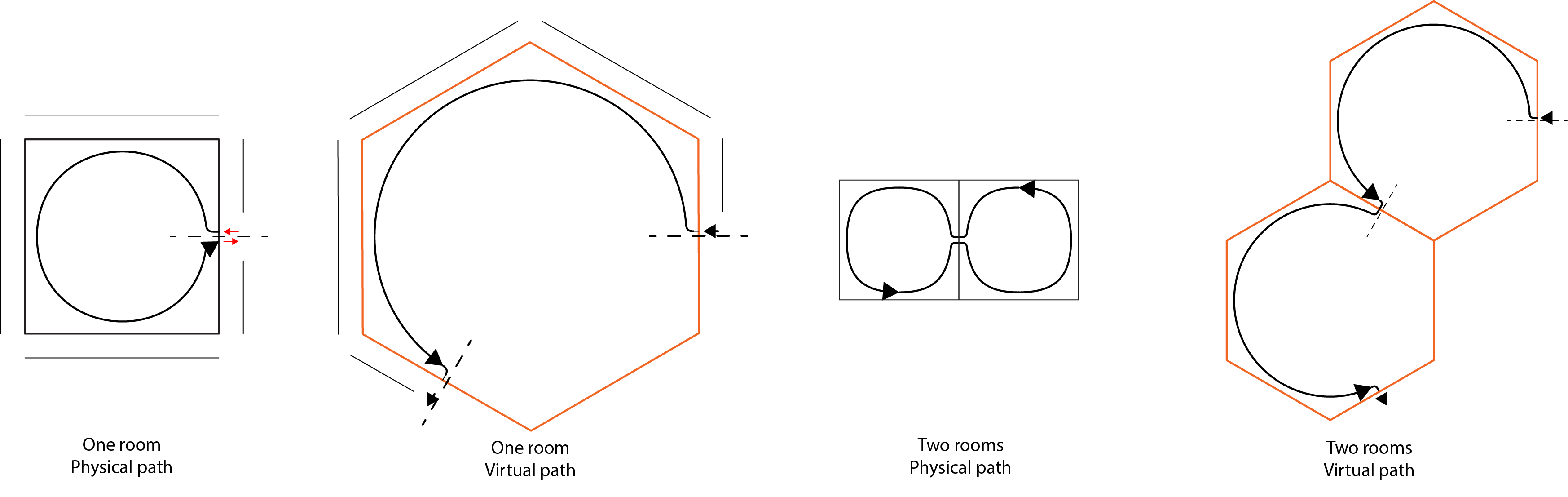

Because the expansion of each individual room is limited, we also considered how to connect different virtual rooms. The entrance of the virtual space and the entrance of the physical space are dislocated relative to each other, which makes it possible to mislead users into thinking that they are walking into a different virtual room while they actually walk in the same physical one. The enlargement can thus be pushed from expanding one room to expanding a series of different environments.

More specifically, if there are two physical spaces connected by a door, the users can see two different doors in one enlarged virtual room, which means the path is visually dislocated. This phenomenon allows us to enlarge two linked physical spaces into a series of linked virtual spaces.

Fig 9. The dislocation of virtual path and physical path.

For example, two linked square rooms can be enlarged into six consecutive virtual hexagonal spaces that are connected to each other. When users walk through the six virtual spaces, they are actually walking a figure-eight route through the two physical spaces. Because this walk-through requires a specific walking direction, we can use multiple stories with walking elevators, or electronic doors, to control the users’ walking direction.

The multi-story design with walking elevators uses different mezzanines and one-way elevators to constrain the direction of the users’ walking path. If users want to reach the door downstairs on the left-hand side, they need to walk towards their right-hand side, because the elevator going downstairs is on the right-hand side. Each mezzanine is connected by a specially designed elevator, whose movement is synchronized with the movement of the user. This design lets users experience a multi-story space while walking on a single floor, and lets them decide both the walking direction and the speed.

Fig 10. Six consecutive virtual hexagonal spaces that are connected to each other.

Using electronic doors is a more direct and easier way to control the walking direction of the user. We can choose whether the doors are open or closed to direct the user’s movement. Although this is easier for the user to understand, it breaks the continuity of the spatial experience to a certain degree.

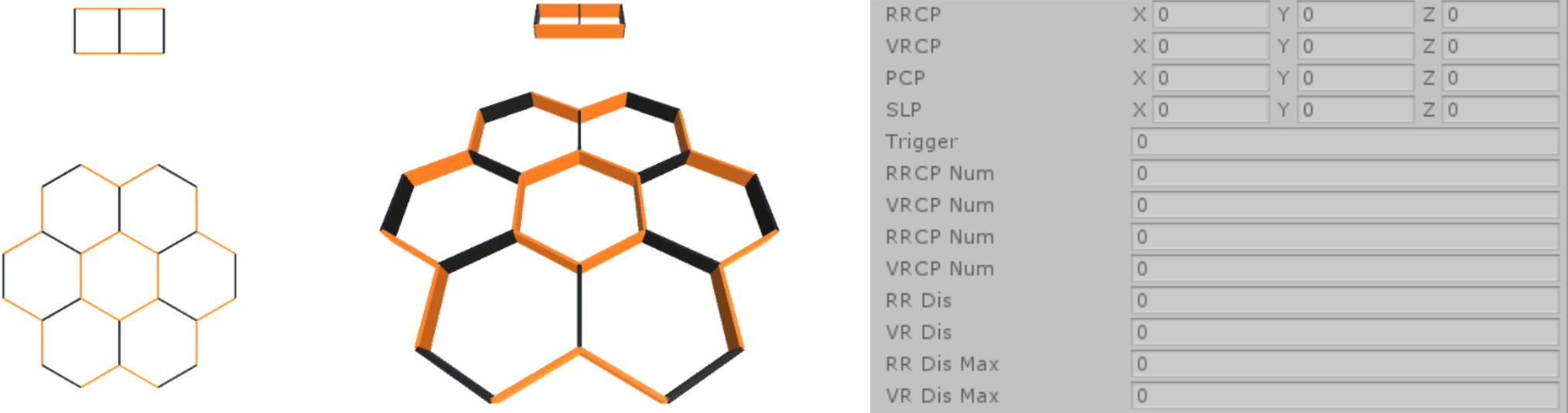

Developers can use the ’Real-World’ Unity prefab to define the size of the physical room and the system will generate the sequence of the virtual rooms automatically. Moreover, the developer can adjust the width of each wall in the inspector panel. These rooms can later be adjusted to different shapes according to the requirement of the spatial design.

4.3 The virtual environment model generation.

After the developer decides how to divide the space and which shape the virtual space should take, he/she can input these parameters into the Unity development kit for the generation.

Users can choose to let the system automatically generate a simple three-dimensional model, or use the enlarged plan offered by the system to create a digital model for their own application scenario with 3D modeling software such as Rhino. The model is then imported into Unity and bound to the algorithm system. The simple three-dimensional model can be used to test whether the space fulfills the needs of the design.

Fig 11. The simple 3D virtual environment model generated from system.

It is very important to make sure that the length of the walls in the 3D model and the length of the corresponding material match the wall in the physical space as much as possible. Each wall in the physical space may correspond to multiple walls in the virtual space, so it is crucial to consider the one-to-many relationship so that users can have consistent tactile experiences.

4.4 The spatial and interactive design.

Once the modeling of the virtual space is finished, users can add specific interactive elements to the models which correspond to their own application scenario. When designing these virtual spaces, we should guarantee that every virtual space is visually unique and identifiable, so that when users walk in these spaces, they can perceive the spatial differences and not lose their way. Besides the visual differences, particular sound characteristics can also be used to enhance the uniqueness of each room.

Technically speaking, this part of the design is realized in Unity or other third-party software, according to its specific needs. As part of the process, we also offer some supplementary tools in the development package, such as noise system, I-system, and other visual packages.

At the interactive level, we developed possibilities for interaction through sounds, hand movements or sight. These possibilities will be mentioned in detail in the paper named “Simulacrum: Giving Characters To Spaces Generated By Redirected Walking”, written by our team member, Liquan Liu, and they also will be displayed in our demonstration scene.

4.5 Synchronization.

In this project, the system aims to provide users with a corresponding haptic experience while walking. It follows the design principle of passive haptics: whenever users touch an object in the virtual space, such as a wall, they touch its physical representative instead.

There are two kinds of synchronization. The first is the synchronization of spatial boundaries, i.e. the synchronization of the walls, which is handled by the algorithm. The second is the synchronization of interactive objects in the space. For example, if a moveable chair is placed in the physical space, the chair should be shown in the virtual space at the same time, so that users receive matching passive haptic feedback when they touch it.

The user should receive full haptic feedback by interacting with the physical object through its virtual proxy. Prior research suggests there are benefits to physical replication of objects in immersive virtual environments, where the use of actual objects significantly increases self-reported solidity and weight, not only of the object touched but also of others in the scene (Insko, 2001).

4.5.1 Object Tracking.

To make objects in the scene physically interactive, we track the location and rotation of all interactive objects in the scene. Assuming there is only one player, the following sections introduce methods of synchronizing objects into virtual scenes.

4.5.1.1 Track with track marker.

We can use a QR marker to track a physical object. Using the front camera of the HMD, the computer analyzes each recorded frame and identifies the location and rotation of the marker in order to track the physical object.
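Once the marker has been located relative to the HMD camera, placing its virtual proxy amounts to composing that relative pose with the tracked pose of the HMD. The sketch below shows this in a simplified top-down 2D form; a real system would work with full 3D transforms, and the `compose` function and its pose layout are hypothetical. Marker detection itself is not shown.

```python
import math


def compose(parent, child):
    """Express `child` (given in the parent's frame) in world coordinates.

    Poses are (x, y, heading) tuples: metres and radians, top-down view.
    """
    px, py, ph = parent
    cx, cy, ch = child
    # Rotate the child's offset by the parent's heading, then translate.
    wx = px + cx * math.cos(ph) - cy * math.sin(ph)
    wy = py + cx * math.sin(ph) + cy * math.cos(ph)
    return (wx, wy, (ph + ch) % (2.0 * math.pi))


# HMD at (1, 2) turned 90 degrees; marker seen 1 m straight ahead of it.
hmd_pose = (1.0, 2.0, math.pi / 2.0)
marker_in_camera = (1.0, 0.0, 0.0)
marker_in_world = compose(hmd_pose, marker_in_camera)
```

Here the marker ends up at roughly (1, 3) in world coordinates, which is where the virtual proxy of the object would be placed.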

4.5.1.2 HTC Vive Camera tracker.

This method uses a camera-based tracker that works similarly to the tracking of the HMD. The HTC Vive Tracker allows developers to track physical objects, from ceiling fans and kittens to feet and mobile phones (VIVE Blog, 2017). The developer can easily use the tracker through the HTC Vive Unity development plug-in.

Fig 12. HTC Vive Object Tracker.

4.6 The Evaluation and review of Virtual Environment.

To evaluate and test specific scenes, we provide a data visualization kit alongside the development kit. It includes a trail visualizer and a data monitor, both of which can visualize and modify data in real time. The system displays this information on the monitor without interfering with the image in the HMD. Multi-player monitoring will also be added to this kit in the future.

4.6.1 Trail Visualizer.

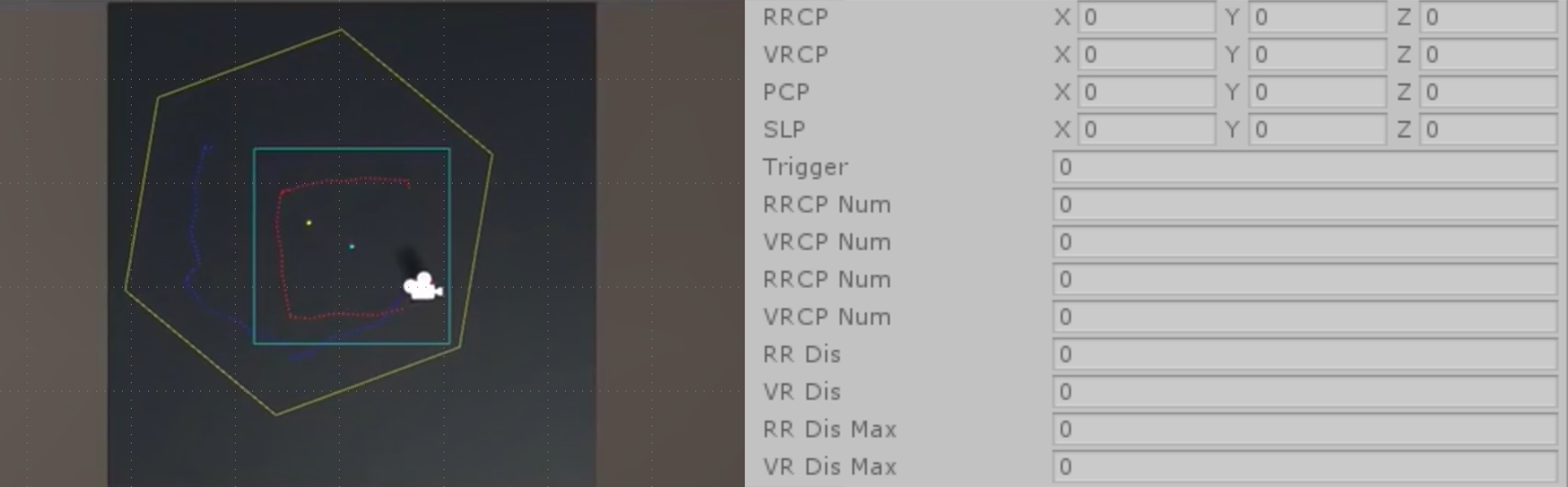

To give a better understanding of how this algorithm works, the kit provides different tools to simulate the redirected walking process in the expanded area. The trail visualizer shows how the virtual walking path differs from the physical one. The system records the whole walking process for each user and visualizes it as a standalone diagram that can be reviewed later.

In the diagram, the virtual space boundary is marked in yellow and the physical boundary in blue. The physical walking path is colored red and the virtual path white (Fig 13).
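The recording side of such a tool can be sketched as a pair of synchronized position lists. The `TrailRecorder` class and its `expansion` measure are hypothetical and only illustrate the idea of comparing the two paths; drawing the diagram is left out.

```python
import math


class TrailRecorder:
    """Stores paired physical and virtual positions, one pair per sample."""

    def __init__(self):
        self.physical = []
        self.virtual = []

    def record(self, physical_xy, virtual_xy):
        self.physical.append(tuple(physical_xy))
        self.virtual.append(tuple(virtual_xy))

    @staticmethod
    def path_length(points):
        # Sum of straight-line distances between consecutive samples.
        return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

    def expansion(self):
        """Virtual path length divided by physical path length."""
        physical = self.path_length(self.physical)
        if physical == 0.0:
            raise ValueError("no physical movement recorded")
        return self.path_length(self.virtual) / physical


trail = TrailRecorder()
trail.record((0.0, 0.0), (0.0, 0.0))
trail.record((1.0, 0.0), (1.5, 0.0))
trail.record((1.0, 1.0), (1.5, 1.5))
```

In this toy recording the user walks 2 m physically and 3 m virtually, so `trail.expansion()` evaluates to 1.5.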

4.6.2 Data Monitor.

Fig 13. The Trail Visualizer demo and the data monitor panel in the development kit.

To expose the detailed data of the running system for debugging, a whole panel in the development kit is devoted to displaying all the parameters the system uses, including the rotation and position of users, the virtual space, the VECP, and the PSCP. The developer can edit these parameters while running the game scene, and the results are recalculated in real time, which makes it possible to debug visually while developing an application with this kit.

4.7 Deployment.

This system can be deployed on two different combinations of hardware. For entry-level hardware, we can use Project Tango-equipped devices, such as the Lenovo Phab 2 Pro. The advantage of using Tango is the mobility of the equipment: the user gets 6 degrees of freedom and can walk without any camera trackers or cables connected to a computer. The disadvantages of this platform are the low accuracy of the locomotion tracking, the lack of hand tracking, and the low image quality.

For high-end setups, we can use the HTC Vive HMD and the Vive Tracker, combined with Leap Motion for hand tracking. This combination provides a better experience in both rendering and tracking. The maximum tracking area is 4 × 8 meters, which sets the tracked size of our demo scene.

For a better experience, it is advisable to use the same boundary material and shape in the physical space and in its virtual representation, so that when the user touches the virtual environment, the haptic sensation matches that of the real world.

Fig 14. Physical Wall Built For Tactile Sense.

5. Experiments and feedback.

When reflecting on the actual situation, it is important to note that, even though everyone is presented with the same visuals, each individual’s reaction and understanding of the semantics may vary. This thesis is mainly interested in the variation of: (i) what we perceive to be real, that is, how users reconstruct the visuals in their minds; and (ii) what the unique or personal differences within the same experience are, and how we can deploy and apply them as designers.

5.1 2200 Simulacrum: Memory of Earth exhibition.

Fig 15. Architectural Design In Simulacrum 2200.

To showcase what the pipeline of this system offers, we integrated redirected walking into the “2200 Simulacrum”, a well-constructed interactive virtual environment designed by our team.

5.1.1 Narrative Background.

2200 Simulacrum is an immersive MR experience set in the far future, when people will be living on a newly colonized planet. The exhibition “Memory of the Earth” aims to remind us of how we used to live on Earth.

This exhibition presents the Wu Xing (Chinese: 五行), also known as the Five Elements, the Five Phases, the Five Agents, or the Five Movements, a short form for the five types of ‘chi’ dominating at different times. It is a fivefold conceptual scheme that many traditional Chinese fields used to explain a wide array of phenomena (En.wikipedia.org, 2017). By showing this scene, we not only demonstrate the use of our redirected walking algorithm, but also explore the possibilities and design principles of interaction in MR.

Fig 16. Elements To Tell The Difference of Rooms.

5.1.2 Experience.

Reactive algorithms are suitable for free exploration, and we leveraged this key feature when we were tasked with fitting a near-infinite virtual environment into an 8 × 4 meter tracked space for an MR experience.

There are six hexagonal virtual rooms forming a loop for the user to explore. Each room has a corresponding interactive room and one of the five elements as a visual cue that lets users identify which room they are exploring. These rooms showcase our ideas about the possibilities of interaction in VR. To connect the rooms, we used multiple stories and elevators to control the user’s walking direction.

Fig 17. The demo enlargement of Physical Space.

Each space has its own particular characteristic for the user to identify, drawing on human spatial memory, which is sensitive to geometric manipulations of the environment (Hartley, Trinkler and Burgess, 2004). Therefore, a key element in making viewers sense that they are walking in a much larger space is the assessment that takes place in their memory of the space.

5.2 Feedback.

One of the most practical concerns for end users is the amount of space required in order to successfully use redirection. This thesis used several indicators to quantify the users’ acceptance of the algorithm. The first is whether users can accurately describe their walking path after the experience; the second is how long it takes users to lose track of their location in physical space after they enter the virtual scene; the third is whether users can work out their location in virtual space and accurately walk to specific locations or return along their original path.

Fig 18. Demo Experience To The Public.

In addition to the above quantitative indicators, we also asked the users to give scores, in order to capture their subjective evaluation of the experience. The items the users evaluated were visuals, feelings, the degree of dizziness or deformation, and the degree to which their ability to balance was affected during and after the experience of the virtual space.

Fig 19. Sketches Drawn By Viewers About Their Spatial Memories.

Analysis of the data collected from users showed quite encouraging feedback. In our sample of 15 people, over 65% could describe their virtual path accurately. Over 85% said they could not locate themselves in the physical world after 3 minutes in the experience, and only 38% could navigate back to their original point.

In terms of the subjective evaluation of the experience, 40% of the people felt some degree of dizziness and 60% believed that the experience affected their balance. However, it is still hard to identify whether this is caused by the algorithm or by the latency of the visual image.

6. Conclusion.

What we perceive to be real is a reconstruction in our minds (Akten, 2017). The potential provided by MR is far from fully explored. This algorithm and development kit have been designed as a basic language for the MR design field, in order to make the most of the available physical space, and they could potentially be applied with success to other fields such as exhibition design, gaming, scene graph, education, remote tourism, official business, etc. From a methodological point of view, the application of the algorithm has achieved its initial and desired goal: to allow the users to walk freely in a perceptually enlarged physical space that entails the tactility of the corresponding virtual objects.

Although there are some limitations in the process, including the site’s minimum area requirement, the restriction of walking directions in multiple rooms, and the users’ physical acceptance of the algorithm, among others, this thesis showcases the algorithm’s great potential for practical application in a diversity of scenarios, which cannot be neglected. It speculates that future development and improvement of the algorithm can overcome existing flaws and offer a complete, intelligent development kit to be implemented in a variety of design fields. To this end, the author will continue optimizing the design application process of the algorithm, while committing to promoting such a practice in architecture and beyond.

During the experiments that took place while designing and testing the algorithm, problems related to psychological and neurological conditions surfaced that invite further investigation. For example, the user’s exposure to inconsistent situations, proprioception, and visual signals has raised interesting questions that remain open. What if the users could be aware of the differences and inconsistencies? How important a role does the visual signal play in self-location during locomotion? These are just some of the questions that could give room to further development of such a challenging and promising research agenda in the near future.

Team members: Junxi Peng / Liquan Liu / Jiasheng Huang, with support and direction from Ruairi Glynn.


7. References.

Ahres, A. (2017). How to get into the Virtual Reality world. [online] Ahmed ahres. Available at: http://www.ahmedahres.com/blog/how-to-get-into-the-virtual-reality-world [Accessed 13 Jul. 2017].

Akten, M. (2017). FIGHT! Virtual Reality Binocular Rivalry (VRBR). – Memo Akten – Medium. [online] Medium. Available at: https://medium.com/@memoakten/fight-virtual-reality-binocular-rivalry-89a0a91c2274 [Accessed 13 Jul. 2017].

Azmandian, M., Grechkin, T., Bolas, M. and Suma, E. (2016). The Redirected Walking Toolkit: A Unified Development and Deployment Platform for Exploring Large Virtual Environments. IEEE VR, (2016).

Begley, S. (2009). The plastic mind. Constable.

Bellan, V., Gilpin, H., Stanton, T., Newport, R., Gallace, A. and Moseley, G. (2015). Untangling visual and proprioceptive contributions to hand localisation over time. Experimental Brain Research, 233(6), pp.1689-1701.

Facebook for Developers. (2017). Facebook for Developers – F8 2017 Keynote. [online] Available at: https://developers.facebook.com/videos/f8-2017/f8-2017-keynote/ [Accessed 13 Jul. 2017].

Faro.com. (2017). 3D Laser Scanner FARO Focus – 3D Surveying – Overview. [online] Available at: http://www.faro.com/products/3d-surveying/laser-scanner-faro-focus-3d/overview [Accessed 13 Jul. 2017].

Frearson, A. (2017). Augmented reality will change the way architects work says Greg Lynn. [online] Dezeen. Available at: https://www.dezeen.com/2016/08/03/microsoft-hololens-greg-lynn-augmented-realityarchitecture-us-pavilion-venice-architecture-biennale-2016/ [Accessed 13 Jul. 2017].

Frissen, I. and Campos, J. (2011). Integration of vestibular and proprioceptive signals for spatial updating.

Hartley, T., Trinkler, I. and Burgess, N. (2004). Geometric determinants of human spatial memory.

Insko, B. (2001). Passive Haptics Significantly Enhances Virtual Environments.

Klatzky, R. (1998). Allocentric and egocentric spatial representations: Definitions, distinctions, and interconnections. Spatial cognition, pp.1-17.

Klatzky, R., Loomis, J., Beall, A., Chance, S. and Golledge, R. (1998). Spatial Updating of Self-Position and Orientation During Real, Imagined, and Virtual Locomotion. Psychological Science, 9(4), pp.293-298.

Lotto, B. (2017). Optical illusions show how we see. [online] Ted.com. Available at: https://www.ted.com/talks/beau_lotto_optical_illusions_show_how_we_see [Accessed 13 Jul. 2017].

Lindeman, R., Sibert, J. and Hahn, J. (1999). Hand-Held Windows: Towards Effective 2D Interaction in Immersive Virtual Environments. IEEE Virtual Reality.

VIVE Blog. (2017). New Project Code and Tutorials Released for VIVE Tracker – VIVE Blog. [online] Available at: https://blog.vive.com/us/2017/04/19/new-project-code-and-tutorials-released-for-vive-tracker/ [Accessed 14 Jul. 2017].

Razzaque, S., Kohn, Z. and Whitton, M. (2001). Redirected Walking. Technical Report.

Artandpopularculture.com. (2017). Simulacrum – The Art and Popular Culture Encyclopedia. [online] Available at: http://www.artandpopularculture.com/Simulacrum [Accessed 13 Jul. 2017].

Wang, X. and Schnabel, M. (2010). Mixed reality in architecture, design and construction. [Netherlands]: Springer, p.4.

En.wikipedia.org. (2017). Wu Xing. [online] Available at: https://en.wikipedia.org/wiki/Wu_Xing [Accessed 14 Jul. 2017].